The Polar Bear Telescope consists of 3 camera systems, each comprising

The entire system is mounted rigidly on the Observatory roof.

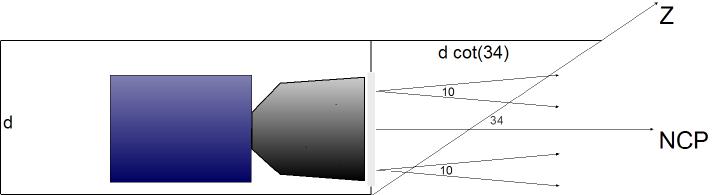

Instrument Schematic

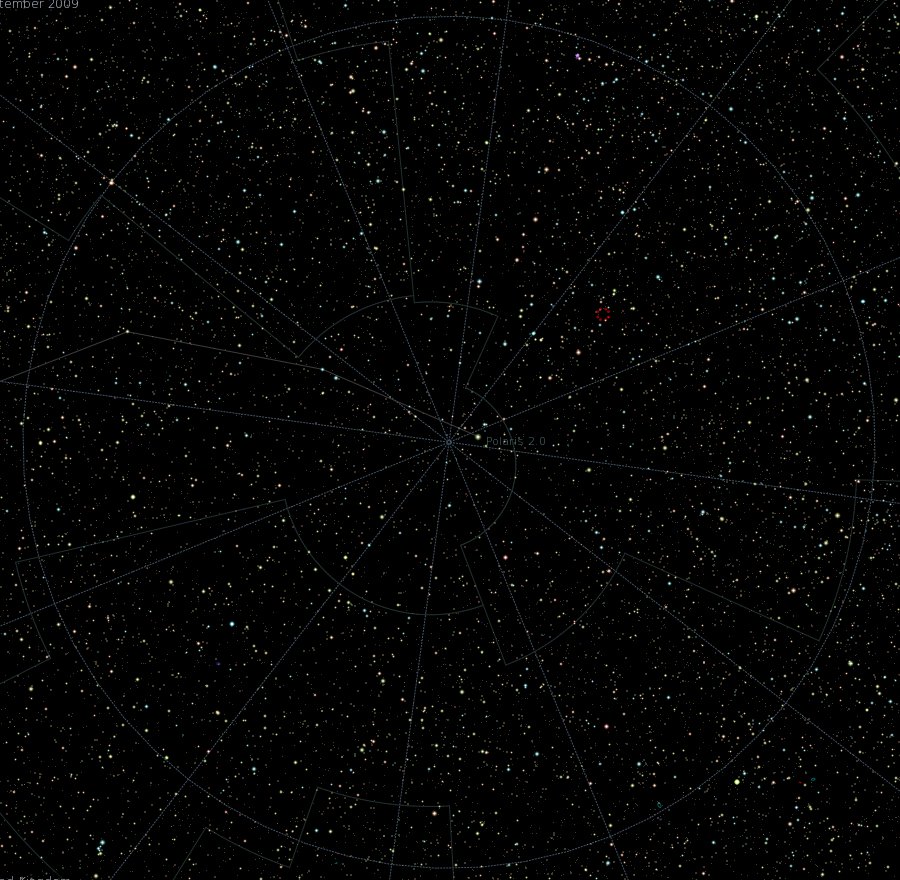

Stars in the PBT FoV

Each Starlight Xpress HXV-16 camera uses a Kodak KAI4021M CCD and a two-stage Peltier cooler to reduce the detector temperature to 10 degrees below ambient.

Each camera has a field of view some 10 degrees square. The three fields are centered at positions spaced 120 degrees apart around a circle some 4 degrees from the North Celestial Pole, and aligned so that the rotation of the Earth will bring a star across roughly the same part of each CCD camera. The cameras have distinct USB identifiers (126, 128 and 129), a feature originally intended to allow multiple cameras to be controlled from one PC.
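As a rough illustration of the field geometry, the sketch below estimates how long the Earth's rotation takes to carry a star from one camera field to the next. It assumes that "120 degrees apart around a circle" means 120 degrees of hour angle about the pole; the function name is hypothetical and not part of any PBT software.

```python
# Length of one sidereal day in seconds: the time for the sky to turn 360 degrees.
SIDEREAL_DAY_S = 86164.0905


def crossing_delay_hours(separation_deg: float) -> float:
    """Hours for a star to move through `separation_deg` of hour angle."""
    return SIDEREAL_DAY_S * (separation_deg / 360.0) / 3600.0


if __name__ == "__main__":
    # Fields spaced 120 degrees apart (assumed to be measured in hour angle).
    print(f"{crossing_delay_hours(120.0):.2f} h between successive cameras")
```

Under this assumption a given star reaches each successive camera field about 8 hours after the previous one.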

The control computers for the three cameras are called, respectively, spoc1, spoc2 and spoc3 (spoc = Simon's POlar Camera). These computers may be controlled from anywhere in the Observatory using a remote desktop viewer such as vncviewer.

During operation, the status of the spocs and recent images could be checked from the polar bear inspector.

The observing procedure is designed to be entirely automatic:

The Starlight Xpress Cameras are switched on and off by a timeswitch in the camera control box. The switches need to be adjusted every month to minimise the time the cameras are running but to ensure that starry skies are recorded.

The cameras are read out using Starlight Xpress software. Every exposure is 30 seconds long. It takes 3 - 4 seconds to read out each image. The raw images are stored directly to a RAID array in the basement. The main folder is mounted as "/starlight" on arpc32 and arpc55.

Each camera writes out its images to a different folder, labelled /starlight/spoc1, /starlight/spoc2 and /starlight/spoc3

The filenames are IMGnnnnn.FIT, where nnnnn is a sequential number which cycles back to 1 after 99999 images have been obtained. The images are in FITS format; the FITS headers contain rudimentary information including the exposure time and the time of observation.

Following any break in the power supply, the RCD socket and the timeswitch clocks in the rooftop box need to be checked and, if necessary, reset.

A TinyTag temperature and humidity monitor is mounted within the control computer enclosure.

It records both quantities at preset regular intervals, currently every 30 minutes, and has the capacity to record for 340 days.

Values have been recorded from around 1 Oct 2012 to 6 Sep 2013.

The highest temperature recorded was 55 C on 12 July 2013.

These data have been saved and a new log started.

The logger was relaunched on 17 June 2014, and should run until 23 May 2015.
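The logger dates above follow directly from the 340-day capacity. The sketch below reproduces that arithmetic; the function names are illustrative only, and the reading count assumes one stored record per 30-minute interval.

```python
from datetime import date, timedelta

INTERVAL_MIN = 30    # logging interval quoted in the text
CAPACITY_DAYS = 340  # logger capacity quoted in the text


def logger_end_date(launch: date, capacity_days: int = CAPACITY_DAYS) -> date:
    """Date on which the logger memory is expected to fill."""
    return launch + timedelta(days=capacity_days)


def readings_stored(capacity_days: int = CAPACITY_DAYS,
                    interval_min: int = INTERVAL_MIN) -> int:
    """Number of records written over the full capacity (assumed one per interval)."""
    return capacity_days * 24 * 60 // interval_min


if __name__ == "__main__":
    print(logger_end_date(date(2014, 6, 17)))  # relaunch date from the text
    print(readings_stored())
```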

In order to avoid excess heating in the control computer ('''spoc''') cabinet, the '''spocs''' can be set to hibernate at dawn, and wake up again at dusk; the wakeup is achieved using WakeupOnStandBy. The wakeup time is set each night by a Python script. The wakeup and sleep times for all three cameras are read from the file ''/starlight/pbt_timings'', which can be changed as required.

If any of the '''spoc'''s are not operating because they are in hibernate mode, they can be woken up using one of the scripts ''/starlight/pbscripts/wolspoc[n].py'' (e.g. > python wolspoc1.py).

The images are read out using Starlight Xpress software (SXVHmfUSB - HX Camera Image). The camera software is automated by a Python script using the [http://pywinauto.openqa.org/ pywinauto library], which interfaces with the Starlight GUI directly.

To start this software, it is necessary to "vnc" into each '''spoc''', and use the ''run'' command to start ''runstarlight.py''. In order for the automatic wake-up and pywinauto to work properly, the '''spoc'''s must be shut down cleanly by ''runstarlight'' (which uses the times set in ''pbt_timings'').

If you start any of the '''spoc'''s during the day, you should finish by running the ''runstarlight'' script. This will detect that it is daytime, hibernate the '''spoc''', and leave it to wake up at the prescribed time.
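The day/night decision described above can be sketched as a simple time-window test. This is only an illustration: the format of ''pbt_timings'' is not documented here, so the wake and sleep times are passed in directly, and the function name is hypothetical rather than taken from ''runstarlight''.

```python
from datetime import time


def is_observing_time(now: time, wake: time, sleep: time) -> bool:
    """True if `now` lies between the wake (dusk) and sleep (dawn) times.

    The observing window normally spans midnight, so the wake time is
    usually later in the day than the sleep time.
    """
    if wake <= sleep:
        # Window does not cross midnight.
        return wake <= now < sleep
    # Window crosses midnight: evening part or early-morning part.
    return now >= wake or now < sleep


if __name__ == "__main__":
    # Hypothetical timings: wake at 18:00, sleep at 06:00.
    print(is_observing_time(time(12, 0), time(18, 0), time(6, 0)))  # midday
    print(is_observing_time(time(23, 0), time(18, 0), time(6, 0)))  # late evening
```

A script started at midday with these example timings would find itself outside the window and could hibernate until the wake time.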

In some cases, the Starlight Xpress software appears to crash, and the errors are not currently trapped by ''runstarlight''. The spocs then do not shut down cleanly, the process must be restarted manually, and observing time is lost. Current experience suggests that memory leaks and suspended programs can cause problems; we are trying to eliminate these glitches. Rebooting the spoc seems to help. ''In order to avoid these problems, '''spoc1''' runs continuously all year, and '''spoc2''' and '''spoc3''' are run continuously during winter months.''

Bias frames must be obtained separately by placing a light-tight cover over the cameras and exposing for 0.001 s. In principle, flat field frames could be acquired every night in twilight, but the current camera control software is not sufficiently versatile to pre-schedule exposures of variable length at pre-set times. For further details, see: http://wiki.arm.ac.uk/wiki/index.php/PBT_Calibrations

Exposures are taken continuously through the night. Currently, every exposure is 30 seconds long. It takes 3 - 4 seconds to read out each image. It should be possible to take a repeating sequence of exposures of different lengths, e.g. 2s, 10s, 20s... by adapting the current setup.
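To illustrate the cadence, the sketch below lists the start offsets of successive frames for a repeating exposure sequence. It assumes a fixed readout time of 3.5 s (the midpoint of the quoted 3 - 4 s) and no other overheads; it does not describe how the Starlight Xpress software actually schedules exposures.

```python
from itertools import cycle, islice

READOUT_S = 3.5  # assumed midpoint of the 3 - 4 s readout quoted in the text


def start_times(exposures_s, n_frames, readout_s=READOUT_S):
    """Start offset (seconds from the first frame) of each of `n_frames` frames.

    `exposures_s` is the repeating list of exposure lengths in seconds.
    """
    starts = []
    t = 0.0
    for exposure in islice(cycle(exposures_s), n_frames):
        starts.append(t)
        t += exposure + readout_s  # next frame starts after exposure and readout
    return starts


if __name__ == "__main__":
    print(start_times([30], 3))         # current fixed 30 s exposures
    print(start_times([2, 10, 20], 4))  # example repeating sequence
```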

The raw images are stored directly to a RAID array in the basement. The main folder is mounted as ''/starlight'' on arpc32 and arpc55.

Each camera writes out its images to a different folder, labelled ''/starlight/spoc1'', ''/starlight/spoc2'' and ''/starlight/spoc3''

The filenames are IMGnnnnn.FIT, where nnnnn is a sequential number which cycles back to 1 after 99999 images have been obtained. The images are in FITS format; the FITS headers contain rudimentary information including the exposure time and the time of observation.
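The wrap-around of the sequence number can be sketched as follows. The five-digit zero padding is an assumption based on the IMGnnnnn.FIT pattern, and the function is illustrative rather than part of the camera software.

```python
def next_image_name(name: str) -> str:
    """Return the filename that follows `name` in the IMGnnnnn.FIT sequence."""
    if not (name.startswith("IMG") and name.endswith(".FIT") and len(name) == 12):
        raise ValueError(f"unexpected filename: {name!r}")
    number = int(name[3:8])
    # The counter cycles back to 1 (not 0) after 99999 images.
    following = 1 if number >= 99999 else number + 1
    return f"IMG{following:05d}.FIT"


if __name__ == "__main__":
    print(next_image_name("IMG00041.FIT"))
    print(next_image_name("IMG99999.FIT"))
```

Because the numbers repeat, raw filenames alone do not identify a frame uniquely over long periods.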

A [http://arpc65.arm.ac.uk/polarbear/ single image from the previous night (spoc1, midnight)] is automatically copied and displayed. The [http://arpc65.arm.ac.uk/polarbear/show3.html midnight images from all three cameras] can also be inspected quickly to ensure that the systems are operating nominally.

Recent frames can be selected and examined individually using the [http://arpc65.arm.ac.uk/polarbear/frames-index.html image inspector].

Raw data are stored on RAID arrays. The physical devices and the names of their mount points on Observatory computers are given below. The data are sorted by date and camera. Data for a given calendar month are collected into a single subfolder. The plan was for raw data to be archived long term on RAID arrays. However, the write-clean-reduce cycle inflicts a heavy load on the RAIDs: an 8 MB file is written every 12 seconds. Empty frames are deleted every day to conserve space.

2009/01 - 2009/12: raid5. Failed.

2010/01 - 2010/10: raid2 (raid2:/raid0/data/starlight/), mounted as arpc32:/raid2/starlight/ and arpc55:/raid2/starlight/. Missing?

2010/10 - 2011/01: raid15 (raid15:/raid0/data/starlight/), mounted as arpc32:/raid15/starlight and arpc55:/raid15/starlight. Failed 2013/12 - all disks replaced.

2011/02 - 2013/11: snapserver, mounted as arpc32:/snap/starlight and arpc55:/snap/starlight. Writing became unstable 2013/11.

2014/01 - : raid15, mounted as arpc55:/raid15/starlight. 1 disk replaced 2014/03; fan replaced 2015/02.
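The write load on the storage can be estimated from the figures quoted earlier: three cameras, 8 MB per frame, and one frame per camera roughly every 34 seconds (30 s exposure plus 3 - 4 s readout). The sketch below is a back-of-envelope estimate only; the 10-hour night is a hypothetical value, and the result is an upper bound because empty frames are deleted.

```python
N_CAMERAS = 3
FRAME_MB = 8.0    # approximate size of one raw frame
CADENCE_S = 34.0  # 30 s exposure plus 3 - 4 s readout


def seconds_between_files(n_cameras: int = N_CAMERAS,
                          cadence_s: float = CADENCE_S) -> float:
    """Mean interval between files arriving from all cameras combined."""
    return cadence_s / n_cameras


def nightly_volume_gb(night_hours: float,
                      n_cameras: int = N_CAMERAS,
                      cadence_s: float = CADENCE_S,
                      frame_mb: float = FRAME_MB) -> float:
    """Raw data volume per night in GB (1 GB taken as 1000 MB)."""
    frames = n_cameras * night_hours * 3600.0 / cadence_s
    return frames * frame_mb / 1000.0


if __name__ == "__main__":
    print(f"{seconds_between_files():.1f} s between files")
    print(f"{nightly_volume_gb(10.0):.1f} GB in a 10-hour night")
```

The combined interval of about 11 seconds is consistent with the figure of roughly one file every 12 seconds.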

This is a stub. Need to add information about how and which times are recorded in image headers, and since when they have been valid. The spocs have only had a proper time service since mid-October. The filenames produced by pbt_imgname are taken from the file creation time on the RAID, whereas the TIME-OBS values in the FITS headers are the start-of-exposure times (so there is a difference). Are we using UT or BST?

15.01.2011 Serious issues exist regarding drifts and jumps between SPOC times and mean time in all the early data. It is not clear whether the issues are due entirely to the SPOC clocks, the RAID array clocks, or both.

The analysis is to compare the time given in the image FITS headers, which give the SPOC times for the start of each exposure, with the filename assigned by the script 'pbt_imgname', which gives the time at which each file is created on the RAID server, as defined by the clock on the RAID system. If all the clocks are correct, there should be a consistent difference of 37s between these two numbers, consisting of 30s for the exposure and 7s for the readout. The timing data were analysed by comparing the two times for one or more images per night over several months in 2010.
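The comparison described above reduces to a single subtraction per image. The sketch below assumes the two times have already been parsed into datetime objects (the filename format written by pbt_imgname is not reproduced here), and the function name is illustrative.

```python
from datetime import datetime

EXPECTED_LAG_S = 37.0  # 30 s exposure + 7 s readout, as stated in the text


def timing_residual(header_start: datetime, file_created: datetime,
                    expected_lag_s: float = EXPECTED_LAG_S) -> float:
    """Seconds by which the observed lag exceeds the expected lag.

    `header_start` is the SPOC time from the FITS header (start of exposure);
    `file_created` is the RAID time encoded in the filename.
    A positive residual means the SPOC clock is behind the RAID clock.
    """
    lag = (file_created - header_start).total_seconds()
    return lag - expected_lag_s


if __name__ == "__main__":
    # Hypothetical pair of times for one image.
    start = datetime(2010, 3, 1, 22, 0, 0)
    created = datetime(2010, 3, 1, 22, 0, 40)
    print(timing_residual(start, created))
```

Tracking this residual for one image per night gives the drift and jump history discussed in the following paragraphs.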

In general all of the SPOC clocks appear to run slow by a few seconds (7/3) per day which, if uncorrected, causes serious discrepancies over time. These were partially addressed by the inclusion of regular time service polling on the SPOC clocks at the start of 2010 December. By mid December the clocks were stable over a number of nights. However, there are sudden jumps every few nights that have not been explained, or corrected by the inclusion of time checks.

The assumption at present is that the RAID clocks are more reliable. However (see below) the introduction of a new data storage system in early 2011 has introduced a new unknown, since the specification for the time service on this system is not yet known. There has been no verification of the clock timing since that date.

The following is the summary of a study of SPOC frame timings up to March 2011. From the first analysed file on 05/01/2009 the SPOC and RAID times have drifted apart by about 3 seconds from night to night. This was determined to be the SPOC clocks falling behind. For the period from 05/01/2009 to 11/10/2010 (inc.) the RAID times are believed to be accurate.

When the RAID was changed on 12/10/2010, the RAID times became unreliable. The systematic falling behind of the SPOCs is still present here, but there is an additional initial offset and an additional systematic separation in times, both due to the RAID. The RAID appears to move ahead of the SPOCs.

On 24/11/2010 the RAID system had its clock updated and began receiving NTP updates. The RAID times are considered accurate from this date forward to the present day. The SPOC clocks still appear to fall behind and occasionally appear to be ‘corrected’ as the gap between the times is reduced, only to steadily increase in size as before.

On 11/01/11 the SPOC clocks were updated and the difference in times became the expected ~35s. This lasted until 21/01/11, when the larger time gap reappeared. There is a gap in the available data during February. The time gap remained at about 120s in 03/2011.

Use the RAID times for data up to 11/10/2010.

Some sort of ‘real’ time could be calculated from the inaccurate SPOC and RAID times given the offsets and gradients. This applies from 12/10/2010 to 23/11/2010 (inc.).

RAID times should be used from 24/11/2010 to the present day.

''SPOC times should be looked at as soon as possible.'' They appear to be dodgy now.
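The suggestion above of computing a ‘real’ time from offsets and gradients amounts to a linear clock model. The sketch below shows one possible form; the offset and drift values in the example are hypothetical, and the real values would have to be fitted to the measured time differences for each period.

```python
from datetime import datetime, timedelta


def corrected_time(recorded: datetime, reference: datetime,
                   offset_s: float, drift_s_per_day: float) -> datetime:
    """Correct a clock reading with a linear model of the clock error.

    The clock is assumed to be behind true time by
    offset_s + drift_s_per_day * (days elapsed since `reference`).
    """
    days = (recorded - reference).total_seconds() / 86400.0
    return recorded + timedelta(seconds=offset_s + drift_s_per_day * days)


if __name__ == "__main__":
    # Hypothetical example: 5 s initial offset, 3 s/day drift, 10 days later.
    t = corrected_time(datetime(2010, 10, 22), datetime(2010, 10, 12), 5.0, 3.0)
    print(t)
```

A model of this kind cannot account for the unexplained sudden jumps, which would need to be handled as separate segments.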

31.05.2011. A new terabyte data storage system was purchased and installed in 2011 February, replacing the previous RAID arrays. Issues with SPOCs writing directly to the SNAPserver were encountered early on, and fixed. However, the links are very fragile so that the SPOCs have a tendency to lose the mount points for the SNAPserver from time to time. SPOCs and cameras appear to function normally, but data is not being saved.

30.01.2012. As the system ages, we are anticipating major system failures. Please log major anomalies here.

30.01.2012. spoc1 hung during the night. Rebooted. Seems ok.

26.03.2012. Power outage on 18.03 left entire spocbox dead. Reset trip switch on main power supply in the spocbox. Rebooted all spocs. Netgear router apparently failed. Replaced and all systems recovered. Subsequent inspection -- router seems ok.

16.06.2012. spoc1 and spoc2 failed.

18.06.2012. Rebooted spoc1, switched off spoc2 and spoc3. spoc1 not responding. Temperature ?

21.06.2012. Powered up all spocs. Only spoc3 responding. Check spocs1 and 2.

27.07.2012. spoc3 not responding. ''Removed all spocs from spocbox for testing.''

16.08.2012. All spocs tested and replaced in spocbox. Normal operations resumed.

01.10.2012. A TinyTag temperature and humidity logger has been installed in the spocbox. It is wired to spoc1 and records every 30 minutes. It should be monitored closely in high temperature periods, in an effort to avoid overheating the spocs.

15.11.2012. The RAID array was filling up with data. HMM built a new partition for spoc data, and the spocs were reconfigured to write to the new partition.

05.12.2012. The spoc inspector shows NO images in the current spoc arrays.

01.03.2014. RAIDs failing again. Replaced; see log above.

17.06.2014. The spoc inspectors are all STILL pointing to the wrong disk.

14.10.2014. There was a major server failure on 5.10.2014 which broke links to all disks. spocs rebooted on 14.10.2014. 10 days probably lost. spoc2 was not recording through September.

11.2014 -- 02.2015. Continuing problems with systems.

One spoc had stopped. All spocs were reconditioned.

Camera window heaters had failed. Repaired.

spoc1 and spoc2 could not see their attached cameras ... fault pending

RAID15 fan failure ... replaced

Every morning two jobs run which process all the image files contained in folders /starlight/spoc[n].

pbt_imgname converts each filename from a sequential number to a name including the camera identifier and the date and time of the start of exposure, as given by the file creation time.

pbt_clean inspects each image using sextractor. If < 200 stars are detected, the file is moved to a bucket, which is emptied 24 hours later. If > 200 stars are detected, the file is moved to a folder created for that date and camera.

pbt_phot carries out the primary tasks of fixing every FITS file, subtracting a bias frame, and dividing by a normalised flat field. It subsequently inspects each image and, if > 500 stars are present, proceeds to compute an astrometric solution using solve_field. Photometry is extracted for every star on the image using sextractor. This script is run once per day and per camera, but can be run from another script in order to loop over days and cameras, e.g. to process one month's data at a time. Further information can be found at:
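The star-count thresholds used by the scripts can be summarised as a small decision function. This is a sketch of the logic as described, not the scripts' actual code: the return labels are invented for illustration, and the treatment of a frame with exactly 200 stars (kept here) is an assumption, since the description does not specify it.

```python
CLEAN_MIN_STARS = 200       # below this, pbt_clean discards the frame
ASTROMETRY_MIN_STARS = 500  # above this, pbt_phot attempts an astrometric solution


def triage(n_stars: int) -> str:
    """Decide what happens to a frame given its detected star count."""
    if n_stars < CLEAN_MIN_STARS:
        return "bucket"              # moved to the bucket, emptied 24 hours later
    if n_stars > ASTROMETRY_MIN_STARS:
        return "archive+astrometry"  # kept, calibrated and solved astrometrically
    return "archive"                 # kept and calibrated only


if __name__ == "__main__":
    for count in (50, 300, 800):
        print(count, triage(count))
```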

See also a report by

The primary objective is to extract the lightcurve for every star within the PBT field of view, and to explore it for evidence of periodic or other variability. The principal steps in this procedure are to:

See also a report by Rachel Johnston

Fireball observed with Polar Bear Telescope

At around 01:00 on 2009 November 22, the Armagh Observatory's meteor camera system detected a bright fireball near the north celestial pole. Investigation of Polar Bear frames at the time showed that it crossed the PBT FoV and was recorded by one of the cameras. The smoke trail left behind continued to be visible for several minutes. The dispersal of the trail indicates the speed and turbulence of the upper atmosphere.

Fireball movie (frame rate approx one every 34 seconds)

Further information: Press Release missing